Commissioned and originally published by School Planning and Management, a Peter Li Publication, December 1999

In the hit film The Matrix, members of a cyber underground can learn everything there is to know–from jujitsu to piloting a helicopter–by having it uploaded to their computerized consciousness. Mastery is attained in less time than it takes to read this sentence.

It’s an appealing fantasy, offering the promise of instant and painless expertise–and neatly sidestepping the nagging problem of motivation. The “magic helmet” represents man and machine merging in a way that makes the most of the strengths of both.

But this enduring science fiction motif also highlights the limitations of the human “data interface” on which education now depends–our five senses, principally sight, hearing, and touch, and the old-fashioned brains which monitor those “inputs.”

Compared with the speed at which information flows in the digital world, these old analog protocols–reading, listening, watching, doing–seem woefully inefficient.

None of the new technologies starting to reach the classroom are likely to make ninth-grade English students faster readers, or speed the passage of a college biology class through the contents of a 700-page textbook–even if that material is now stored on a Web server and viewed on a satellite-cellular PalmPilot.

The greatest promise turn-of-the-millennium communications technology offers educators is in bringing the Universe and all we know about it to a student’s digital desktop, in vivid color and 3D sound. But for learning to take place, that information still has to pass through human eyes and ears to an engaged human brain.

That bottleneck must be addressed if we are to add much-needed breadth and depth to a general primary education without extending it well into adulthood.

What if instead of plugging in a smart desk, a personal comset, or a virtual reality headset, we could plug in the students themselves?

The nature of the mind remains perhaps the most intractable puzzle the human mind has confronted. Though the “Decade of the Brain” now concluding vastly expanded our understanding, the neurobiochemical processes which produce memory, cognition, recognition, and consciousness are still only dimly glimpsed.

Writing in a recent issue of Scientific American, neuroscientist Antonio R. Damasio described the state of his art as “quite incomplete, any way you slice it.” Nevertheless, Damasio is optimistic that by the middle of the next century, advances in neuroscience will erase the dualism of mind/brain, and give us the makings of a technology of thinking.

“A substantial explanation for the mind’s emergence from the brain will be produced and perhaps soon,” Damasio writes. “There is no reason to expect that neurobiology cannot bridge the gulf.”

Whether that emerging technology will allow for the “magic helmet” of science fiction will depend on what we learn about evolution’s most mysterious invention. It may be that there’s no way to “force-feed” a human brain which hasn’t already been discovered in a million years of parents teaching children. The senses may remain the only points of access to the mind.

But even if “jacking in” remains a fantasy, exhaustive knowledge of the brain as a bio-machine is almost certain to produce a powerful toolkit for optimizing its performance.

Whatever form it takes, this will almost certainly prove a mixed blessing. For, stripped of its cultural cloak, education is an attempt to manage the stimuli reaching the senses. And the dark lining is that the same technologies which present the mind with wonders can also be used to program it with lies.

Moreover, while some might see a prospect for the final democratization of education, with every parent–even every student–sculpting a unique curriculum, we must remind ourselves that mere access is not enough. Is a digital library of ten million volumes any more valuable than a single paper book to someone who chooses not to read–or even to learn to read?

Our 20th Century experience with the primitive virtual realities we call video games raises a real prospect of entertaining ourselves into oblivion–the human equivalent of the rodent repeatedly pressing the button which sends an electrical charge into the pleasure center of its brain.

If access were all that mattered, the History Channel would be as popular as Pokemon.

It may be that the most valuable secret waiting to be unveiled by neuroscience is the secret of human curiosity–most especially how to repair it when it’s been abused.

If we don’t have the “magic helmet” by 2099, we will surely wish we did. For the safest prediction of all is that the explosion of human knowledge, invention, and creation in the 21st Century will dwarf that which we witnessed in the 20th. Lifelong education will be necessary, but not sufficient, in a future where the sum of all knowledge far exceeds the capacity of even our most gifted Renaissance men and women to absorb.

The real experts of the year 2099 will be those who are most skilled at framing questions and finding answers in a cross-cultural planetary library–an Encyclopedia Humana–that doubles in size every year. They will have at their fingertips the accumulated experience of billions of their brothers and sisters, and they will leverage that knowledge to accomplish the stuff of dreams.

Even so, I expect my great-great-grandchildren to discover that two hoary old 20th Century truths are still holding fast: that there can be no learning without thinking, and no wisdom without time for reflection. And the great teachers, whether self-aware computer programs or self-aware flesh-and-blood, will be those who can help their students find reasons to pursue both.

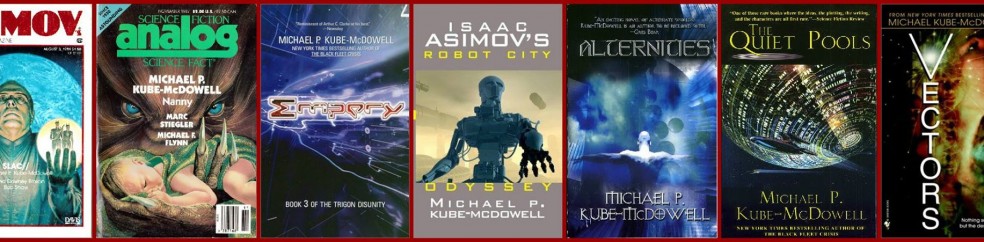

— Michael P. Kube-McDowell is a 1985 Presidential Scholar Distinguished Teacher and the co-author with Arthur C. Clarke of The Trigger (Bantam, 1999).

“Divining the Mind” received the 2000 EdPress Distinguished Achievement Award for Excellence in Educational Publishing in the Editorial category.